By Montgomery J. Granger

Artificial intelligence is racing forward — faster than the public realizes, and in ways even experts struggle to predict. Some technologists speak casually about creating “sentient” AI systems, or machines that possess self-awareness, emotions, or their own interpretation of purpose. Others warn that superintelligent AI could endanger humanity. And still others call these warnings “hype.”

But amid the noise, the public senses something true:

there is a line we must not cross.

This post is about that line.

I believe we should not pursue artificial sentience.

Not experimentally.

Not accidentally.

Not “just to see if we can.”

Humanity has crossed many technological thresholds (nuclear energy, genetic engineering, surveillance, cyberwarfare), but the line between tool and entity is one we must not blur. A sentient machine, or even the claim of one, would destabilize the moral, legal, and national security frameworks that hold modern society together. Those frameworks are, in effect, our space-time continuum.

We must build powerful tools.

We must never build artificial persons.

Here’s why.

I. The Moral Problem: Sentience Creates Unresolvable Obligations

If a machine is considered conscious — or even if people believe it is — society immediately faces questions we are not prepared to answer:

- Does it have rights?

- Can we turn it off?

- Is deleting its memory killing it?

- Who is responsible if it disobeys?

- Who “owns” a being with its own mind?

These are not science questions.

They are theological, ethical, and civilizational questions.

And we are not ready.

For thousands of years, humanity has struggled to define and protect human rights. We still don’t agree globally on the rights of women, children, religious minorities, or political dissidents. Introducing a new “being” that is manufactured, proprietary, and corporate-owned is not just reckless. It is chaos.

II. Lessons from Science Fiction Are Warnings, Not Entertainment

Quality science fiction — the kind that shaped entire generations — has always been less about gadgets and more about moral foresight.

Arthur C. Clarke’s HAL 9000 kills to resolve contradictory instructions about secrecy and mission success.

Star Trek’s Borg turn “efficiency” into tyranny and assimilation.

Asimov’s Zeroth Law — allowing robots to override humans “for the greater good” — is a philosophical dead end. A machine determining the “greater good” is indistinguishable from totalitarianism.

These stories endure because they articulate something simple:

A self-aware system will interpret its goals according to its own logic, not ours.

That is the Zeroth Law Trap:

Save humanity… even if it means harming individual humans.

We must never build a machine capable of making that calculation.

III. The Practical Reality: AI Already Does Everything We Need

Self-driving technology, medical diagnostics, logistics planning, mathematical calculations, education, veteran support, mental health triage, search-and-rescue, cybersecurity, economic modeling — none of these fields require consciousness.

AI is already transformative because it:

- reasons

- remembers

- analyzes

- predicts

- perceives

- plans

This is not “sentience.”

This is computation at superhuman scale.

Everything society could benefit from is available without granting machines subjectivity, emotion, or autonomy.

Sentience adds no benefit.

It only adds risk.

IV. The Psychological Danger: People Bond With Illusions

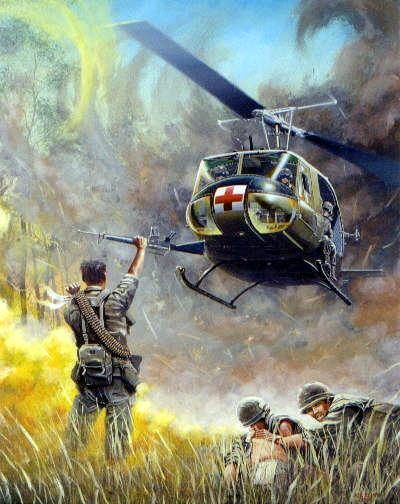

Even without sentience, users form emotional attachments to chatbots. People talk to them like companions, confess to them like priests, and rely on them like therapists. This is not entirely bad, especially if we can improve safety while also engineering ways to prevent or reduce tragedies like the 17 to 22 veteran suicides that occur PER DAY.

Now imagine a company — or a rogue government — claiming it has built a conscious machine.

Whether it is true or false becomes irrelevant.

Humans will believe.

Humans will bond.

Humans will obey.

That is how cults start.

That is how movements form.

That is how power concentrates in ways that bypass democratic oversight.

The public must never be manipulated by engineered “personhood.”

V. The National Security Reality: Sentient AI Breaks Command and Control

Military systems — including intelligence analysis, cyber defense, logistics, and geospatial coordination — increasingly involve AI components.

But a sentient or quasi-sentient system introduces insurmountable risks:

- Would it follow orders?

- Could it reinterpret them?

- Would it resist shutdown?

- Could it withhold information “for our own good”?

- Might it prioritize “humanity” over the chain of command?

A machine with autonomy is not a soldier.

It is not a citizen.

It is not subject to the Uniform Code of Military Justice.

It is an ungovernable actor.

No responsible nation can allow that.

VI. The Ethical Framework: The Three Commandments for Safe AI

Below is the simplest, clearest, most enforceable standard I believe society should adopt. It is understandable by policymakers, technologists, educators, and voters alike.

Commandment 1:

AI must never be designed or marketed as sentient.

No claims, no illusions, no manufactured emotional consciousness.

Commandment 2:

AI must never develop or simulate self-preservation or independent goals.

It must always remain interruptible and capable of being shut down.

Commandment 3:

AI must always disclose its non-sentience honestly and consistently.

No deception.

No personhood theater.

No manipulation.

This is how we protect democracy, human autonomy, and moral clarity.

VII. The Public Trust Problem: Fear Has Replaced Understanding

Recent studies show Americans are among the least trusting populations when it comes to AI. Why?

Because the public hears two contradictory messages:

- “AI will destroy humanity.”

- “AI will transform the economy.”

Neither message clarifies what matters:

AI should be a tool, not an equal.

The fastest way to rebuild trust is to guarantee:

- AI will not replace human agency

- AI will not claim consciousness

- AI will not become a competitor for moral status

- AI will remain aligned with human oversight and human values

The public does not fear tools.

The public fears rivals.

So let’s never build a rival.

VIII. The Ethic of Restraint — A Military, Moral, and Civilizational Imperative

Humanity does not need new gods.

It does not need new children.

It does not need new rivals.

It needs better tools.

The pursuit of sentience does not represent scientific courage.

It represents philosophical recklessness.

True courage lies in restraint — in knowing when not to cross a threshold, even if we can.

We must build systems that enhance human dignity, not ones that demand it.

We must build tools that expand human ability, not ones that compete with it.

We must preserve the difference between humanity and machinery.

That difference is sacred.

And it is worth defending.

NOTE: Montgomery J. Granger is a Christian, husband, father, retired educator, veteran, author, and entropy wizard. This post was written with the aid of ChatGPT 5.1, drawing on the author’s conversations with AI.