“Education is risky, for it fuels the sense of possibility.” – Jerome Bruner, The Culture of Education

When I was in high school in Southern California in the late 1970s, our comprehensive public school wasn’t just a place to learn algebra and English. We had a working restaurant on campus. Students could take auto body and engine repair, beauty culture, metal shop, wood shop, and even agriculture, complete with a working farm. We were being prepared for the real world, not just for college entrance exams. We learned skills, trades, teamwork, and the value of hands-on learning.

“Kids LOVE it when you teach them how to DO something. Let them fail, let them succeed, but let them DO.” – M. J. Granger

That’s why it baffles me that in 2025, when technology has made it easier than ever to access knowledge, communicate across time zones, and develop new skills quickly, there are governors and education officials banning the very tools that make this possible: smartphones and artificial intelligence.

“Remember your favorite teacher? Did they make you feel special, loved and smart? What’s wrong with that?” – M. J. Granger

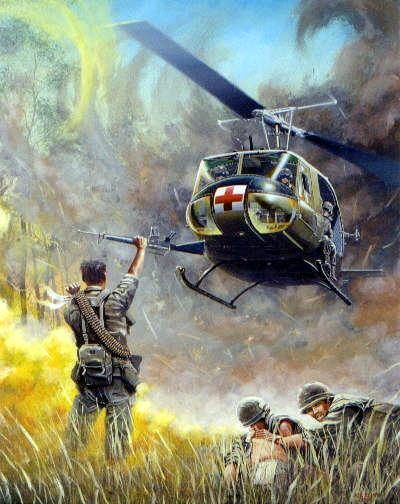

Let me be clear. I’m a father, a veteran, a retired school administrator, and an advocate for practical education. And I’m deeply disappointed in the decision to ban smartphones in New York schools. Not just because it feels like a step backward, but because it betrays a fundamental misunderstanding of what education should be about: preparing students for life.

“No matter the tool, stay focused on the reason for it.” – M. J. Granger

Banning tools because some students might use them inappropriately is like banning pencils because they can be used to doodle. The answer isn’t prohibition; it’s instruction. Teach students how to use these tools ethically, productively, and critically. Train teachers to guide students in responsible digital citizenship. Let schools lead, not lag, in the responsible integration of tech.

“If every teacher taught each lesson as if it were their last, how much more would students learn?” – M. J. Granger

Smartphones can be life-saving devices in school emergencies. Police agencies often recommend students carry phones, especially in the case of active shooter incidents. Beyond that, they can be used for research, translation, organization, photography, collaboration, note-taking, recording lectures, and yes, leveraging AI to improve writing, problem-solving, and creativity.

“I feel successful as an educator when, at the end of a lesson, my students can say, ‘I did it myself.’” – M. J. Granger

When calculators came on the scene, some claimed they would “ruin math.” When spellcheck arrived, people worried it would erode literacy. When the dictionary first became widely available, no one insisted on a footnote saying, “This essay was written with help from Merriam-Webster.” It was understood: the dictionary is a tool. So is AI. So are smartphones. And so is the ability to evaluate when and how to use each one.

“Accountability, rigor, and a good sense of humor are essentials of quality teaching.” – M. J. Granger

In the real world, results matter. Employers care about the quality and timeliness of the work, not whether it was handwritten or typed, calculated with long division or a spreadsheet. Tools matter. And the future belongs to those who can master them.

“Eliminate ‘TRY’ from your vocabulary; substitute ‘DO’ and then see how much more you accomplish.” – M. J. Granger

The AI revolution isn’t coming—it’s already here. With an estimated 300 to 500 new AI tools launching every month and over 11,000 AI-related job postings in the U.S. alone, the landscape of education and employment is evolving at breakneck speed. From personalized tutoring apps to advanced coding copilots, the innovation pipeline is overflowing. Meanwhile, employers across nearly every industry are urgently seeking candidates with AI fluency, making it clear that today’s students must be equipped with the skills and mindset to thrive in a world powered by artificial intelligence. Ignoring these trends in education is not just shortsighted—it’s a disservice to the next generation.

“If you fail to plan, you plan to fail.” – Benjamin Franklin

If we are serious about closing the opportunity gap, about keeping our students safe, about equipping them for a global workforce driven by rapid innovation — then the solution is not to lock away the tools of the future, but to teach students how to use them.

“To reach the stars sometimes you have to leave your feet.” – M. J. Granger

The future is now. Let’s stop banning progress, and start preparing for it.

Montgomery Granger is a retired educator of 36 years, with a BS Ed. from the University of Alabama (1985), an MA in Curriculum and Teaching from Teachers College – Columbia University (1986), and School District Administrator (SDA) certification through The State University of New York at Stony Brook (2000).

NOTE: This blog post was written with the assistance of ChatGPT 4o.